We have stepped into a world where machines are not just tools; they are our smart helpers in solving complex problems. Whether it is predicting the weather or recommending products online, machine learning models help us make better decisions.

However, these models can be hard to understand: we feed in data and get results back, but we often don't know how they reached a particular answer or conclusion. This is where model interpretability tools come in. These tools help us understand how a machine learning model works and how it makes its decisions. Let's look at what these tools are and why they are important.

What is Model Interpretability?

Interpretability is the ability to explain how a machine learning model reaches its decisions. Anyone using a model to solve a problem should be able to tell why it made a particular prediction. This matters especially in high-stakes fields such as healthcare and finance.

For example, if a healthcare model says that a patient is suffering from a specific disease, we should be able to understand why the model says this. If the model cannot give reasons for its conclusion, it is hard to trust.

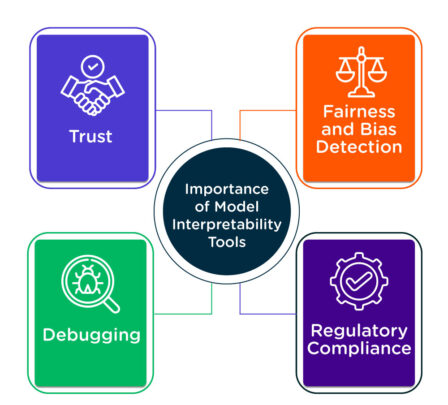

Why Are Model Interpretability Tools Important?

Model interpretability tools are important for several reasons:

- Trust: We are more likely to trust a model's output when we know what the model is actually doing. In domains such as law or healthcare, understanding how a model arrives at its decisions is key to building that trust.

- Debugging: If a model starts making unexpected or wrong predictions, interpretability tools help us find where it is going wrong so that we can fix it.

- Fairness and Bias Detection: Models can make biased predictions because of the data they were trained on. Interpretability tools help us check whether a model is fair by showing how it makes decisions. If we find bias, we can reduce it, for example by retraining the model on more balanced data. If a loan approval model favors men over women, adjusting the training data is one way to improve fairness.

- Regulatory Compliance: In some industries, laws and regulations require that decisions made with machine learning models can be explained. For example, financial institutions may need to explain why a loan application was denied. Interpretability tools help meet these requirements.
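A first fairness check can be as simple as comparing how often the model approves applicants from each group, a measure often called demographic parity. The sketch below assumes the model's decisions are available as hypothetical (group, approved) pairs; the function names `approval_rates` and `demographic_parity_gap` are illustrative and not taken from any particular library.

```python
from collections import defaultdict

def approval_rates(records):
    """Return the fraction of approved applications for each group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True if the model approved the loan (format assumed for illustration).
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, is_approved in records:
        totals[group] += 1
        if is_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions, for illustration only.
decisions = [
    ("men", True), ("men", True), ("men", True), ("men", False),
    ("women", True), ("women", False), ("women", False), ("women", False),
]

print(approval_rates(decisions))          # {'men': 0.75, 'women': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A large gap does not prove bias on its own, but it shows where to look more closely with the explanation tools described below.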

Types of Model Interpretability Tools

Various tools and methods can make machine learning models more interpretable. They generally fall into two categories: intrinsic interpretability and post-hoc interpretability.

1. Intrinsic Interpretability

Some machine learning models are more interpretable than others. For instance, a simple linear regression or decision tree model is easy to understand. In a decision tree, each split tests a single feature of the data, so you can follow the path from the root to a leaf to see how the final decision was made.
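To make "following the path" concrete, here is a minimal sketch of a hand-built decision tree. It assumes a hypothetical loan model with made-up `income` and `debt_ratio` thresholds; a real tree would be learned from data, but reading its decisions works the same way. The function records each test on the way from the root to a leaf, and that record is the explanation.

```python
# A hypothetical, hand-built decision tree for a loan decision.
# Internal nodes test one feature against a threshold; leaves hold a label.
TREE = {
    "feature": "income",
    "threshold": 50_000,
    "left": {"label": "deny"},            # income <= 50,000
    "right": {
        "feature": "debt_ratio",
        "threshold": 0.4,
        "left": {"label": "approve"},     # debt_ratio <= 0.4
        "right": {"label": "deny"},       # debt_ratio > 0.4
    },
}

def predict_with_path(node, sample):
    """Walk the tree for one sample and record every test along the way."""
    path = []
    while "label" not in node:
        feature, threshold = node["feature"], node["threshold"]
        value = sample[feature]
        if value <= threshold:
            path.append(f"{feature} = {value} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} = {value} > {threshold}")
            node = node["right"]
    return node["label"], path

label, path = predict_with_path(TREE, {"income": 72_000, "debt_ratio": 0.25})
print(label)  # approve
for step in path:
    print(step)
# income = 72000 > 50000
# debt_ratio = 0.25 <= 0.4
```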

These are called intrinsically interpretable models because their structure makes it easy to see how they produce predictions. More complex models, such as deep neural networks or random forests, are not as easy to interpret, and that is where post-hoc interpretability comes in.

2. Post-Hoc Interpretability

Post-hoc methods are applied after a model has been trained, which makes them useful for understanding complex models. They analyze the model's behavior and explain its decisions. Popular post-hoc model interpretability tools include:

- LIME: LIME stands for Local Interpretable Model-agnostic Explanations. It explains individual predictions of any machine learning model by approximating the model, in the neighborhood of a single prediction, with a simpler interpretable model such as a linear model. So even if you use a highly complex model, LIME can give you a simple explanation of how a particular prediction was made.

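- SHAP: SHAP (SHapley Additive exPlanations) values are based on Shapley values from cooperative game theory. They show how much each feature contributes to a particular prediction, relative to a baseline such as the model's average prediction. This is useful for identifying which features contributed most to a decision.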

- Partial Dependence Plots (PDP): PDPs show the relationship between a feature and the predicted outcome, averaged over the values of the other features in the data. They help us see how changing that feature affects the model's predictions on average.

- Saliency Maps (for Deep Learning Models): Saliency maps highlight which parts of an input image most influence a deep learning model's prediction, which is especially useful in image classification tasks. For example, in medical image analysis, a saliency map can point out the areas of an image that the model focuses on when diagnosing a disease.

- Feature importance: Feature importance scores measure how much each feature contributes to a model's predictive performance. They reveal which features are actually driving the model's predictions.
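The LIME idea can be sketched in a few lines: perturb the instance, weight each perturbed sample by how close it is to the original, and fit a weighted linear model to the black box's outputs. This is a simplified illustration, not the `lime` package's API. The `black_box` function, the Gaussian perturbation, the exponential proximity kernel, and the `scale` and `kernel_width` values are all assumptions chosen for the example.

```python
import math
import random

def black_box(x1, x2):
    """Stand-in for a complex model (hypothetical, for illustration only)."""
    return math.sin(x1) + x2 ** 2

def solve(a, b):
    """Solve the square linear system a @ w = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: move the row with the largest entry into place.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def lime_explain(model, point, num_samples=500, scale=0.1, kernel_width=0.2, seed=0):
    """Fit a proximity-weighted linear surrogate to `model` around `point`.

    Returns [intercept, weight_1, ..., weight_k]; each weight approximates how
    that feature moves the model's output near `point`.
    """
    rng = random.Random(seed)
    d = len(point) + 1  # one column per feature plus an intercept
    # Weighted normal equations: (X^T W X) w = X^T W y, with rows [1, x_1, x_2, ...].
    xtwx = [[0.0] * d for _ in range(d)]
    xtwy = [0.0] * d
    for _ in range(num_samples):
        # 1. Perturb the instance with small Gaussian noise.
        x = [p + rng.gauss(0.0, scale) for p in point]
        # 2. Weight the sample by its closeness to the original instance.
        dist2 = sum((xi - pi) ** 2 for xi, pi in zip(x, point))
        weight = math.exp(-dist2 / kernel_width ** 2)
        # 3. Accumulate this sample's contribution to the least-squares system.
        row = [1.0] + x
        y = model(*x)
        for i in range(d):
            xtwy[i] += weight * row[i] * y
            for j in range(d):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)

intercept, w1, w2 = lime_explain(black_box, [0.0, 1.0])
print(f"local weight for x1: {w1:.2f}")  # close to cos(0) = 1
print(f"local weight for x2: {w2:.2f}")  # close to 2 * 1.0 = 2
```

At the point (0, 1), the function sin(x1) + x2**2 has slopes cos(0) = 1 and 2 * 1 = 2, so the surrogate's weights should come out close to those values. That local, linear summary of a non-linear model is the kind of explanation LIME aims for.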
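For a model with only a few features, Shapley values can be computed exactly by averaging each feature's marginal contribution over every coalition of the other features. The sketch below assumes a hypothetical three-feature `model` and treats an "absent" feature as taking its value from a chosen `baseline` instance, which is one common convention. The `shap` library itself relies on faster approximation algorithms rather than this brute-force enumeration, whose cost grows as 2 to the power of the number of features.

```python
from itertools import combinations
from math import factorial

def model(features):
    """Hypothetical model: linear terms plus an income-debt interaction."""
    age, income, debt = features["age"], features["income"], features["debt"]
    return 0.5 * age + 2.0 * income - 3.0 * debt + 1.0 * income * debt

def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    A feature outside the coalition is set to its baseline value.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Coalition members take the instance's value, the rest the baseline's.
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {name}) - value(s))
        phi[name] = total
    return phi

instance = {"age": 40, "income": 3, "debt": 1}
baseline = {"age": 30, "income": 2, "debt": 2}
phi = shapley_values(model, instance, baseline)
print({k: round(v, 2) for k, v in phi.items()})  # {'age': 5.0, 'income': 3.5, 'debt': 0.5}
print(round(sum(phi.values()), 2), model(instance) - model(baseline))  # 9.0 9.0
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline (26 - 17 = 9). This is the efficiency property of Shapley values, and it is what makes SHAP explanations additive.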
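A partial dependence curve can be computed by brute force: fix the feature of interest at each value on a grid, leave the other features as observed, and average the model's predictions over the dataset. The `model`, `data`, and grid below are hypothetical, and plotting is left out so that only the computation is shown.

```python
def model(x1, x2):
    """Hypothetical model with an interaction between x1 and x2."""
    return 3.0 * x1 + x1 * x2

# Small hypothetical dataset of (x1, x2) rows.
data = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0), (3.0, 4.0)]

def partial_dependence(model, data, feature_index, grid):
    """Average prediction when one feature is forced to each grid value.

    For every grid value, that feature is overwritten in every row while the
    other features keep their observed values; the predictions are averaged.
    """
    curve = []
    for v in grid:
        preds = []
        for row in data:
            modified = list(row)
            modified[feature_index] = v
            preds.append(model(*modified))
        curve.append(sum(preds) / len(preds))
    return curve

grid = [0.0, 1.0, 2.0]
print(partial_dependence(model, data, 0, grid))  # [0.0, 5.5, 11.0]
```

Here the curve for x1 is a straight line with slope 5.5, which is 3 plus the average value of x2 (2.5). The plot therefore shows the average effect of x1, with the interaction averaged over the data rather than shown separately.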
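One widely used, model-agnostic way to compute feature importance is permutation importance: shuffle one feature's column and measure how much the model's error increases. The sketch below assumes a hypothetical `model` and synthetic data generated from it, and uses mean squared error as the metric. Other definitions of feature importance exist as well, such as impurity-based scores for tree ensembles.

```python
import random

def model(row):
    """Hypothetical trained model: relies heavily on feature 0, ignores feature 2."""
    return 4.0 * row[0] + 1.0 * row[1]

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in error when each feature column is randomly shuffled.

    Shuffling a column breaks its link with the target; the larger the
    resulting increase in error, the more the model depends on that feature.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = mean_squared_error(y, [model(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Hypothetical data: three uniform features, target produced by the model itself.
data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
y = [model(row) for row in X]

names = ["feature_0", "feature_1", "feature_2"]
for name, imp in zip(names, permutation_importance(model, X, y)):
    print(f"{name}: {imp:.3f}")  # feature_2 prints 0.000
```

Feature 2 scores exactly zero because the model never reads it, while feature 0 scores far higher than feature 1 because the model weights it four times as heavily.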

How Do These Tools Help in Real Life?

Suppose you are a doctor who wants to use a machine learning model to predict whether a patient is at risk of developing a certain disease. You would want the model to be not only accurate but also trustworthy. Using tools like SHAP or LIME, you can see which features (age, medical history, and so on) matter most in predicting the risk.

Similarly, in finance, banks use machine learning models to decide whether a person qualifies for a loan. These models have to be interpretable so that the decisions made are fair and customers can understand why they were approved or denied. Model interpretability tools allow the bank to explain the reasoning behind the loan decisions, which is important for customer trust and regulatory compliance.

Contemplating the Future of Responsible AI and Machine Learning

Model interpretability tools are vital for making complex machine learning models understandable and transparent. They help us trust decisions made with machine learning models, find errors, check for fairness, and meet regulatory requirements. As machine learning plays a role in more and more areas of life, these tools will only become more important. Understanding how a machine learning model works is essential for using it responsibly and effectively.

For more such informative blogs, visit WisdomPlexus!

FAQs

1. What is model interpretability in machine learning?

Ans: Model interpretability in machine learning is the degree to which we can understand how a model makes its predictions or decisions. It helps us know which factors the model considers and why it reaches a particular outcome.

2. What are the metrics for model interpretability?

Ans: Common measures used in model interpretability include feature importance scores, which show which features influence predictions the most, and per-prediction attributions from explanation methods such as SHAP or LIME, which show why the model made a particular decision.

3. How can model interpretability be measured?

Ans: Model interpretability can be assessed by checking whether it is easy to understand how the model makes decisions, whether its explanations are clear and accurate, and whether its behavior agrees with human reasoning.
