Imagine walking into a supermarket. You notice that people who buy bread often pick up butter too, and that shoppers purchasing diapers frequently add baby wipes to their carts. These patterns are not random; they reveal hidden connections in consumer shopping behavior.

But how do stores discover these associations? That is where the Apriori Algorithm comes in. It is a method for efficiently identifying frequent itemsets and association rules. Originally developed for market basket analysis, the algorithm is now applied extensively across retail, healthcare, finance, and other fields. Its ability to find relationships between items makes it valuable for decision-making. Let’s take a closer look at this data mining technique.

What is the Apriori Algorithm?

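The Apriori Algorithm is a classic data mining technique used to find frequent itemsets in transactional databases. It follows a simple principle: if an itemset is frequent, all of its subsets must also be frequent. Equivalently, if an itemset is infrequent, none of its supersets can be frequent, so those supersets never need to be counted.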

For example, if people tend to purchase bread and butter together, the algorithm picks up this pattern, and businesses can use it to optimize product placement or recommendations.
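In practice, the principle becomes a pruning check: before counting a larger candidate itemset, test whether any of its one-item-smaller subsets is missing from the list of frequent itemsets. The Python sketch below is only an illustration; the function name `has_infrequent_subset` and the sample frequent pairs are hypothetical.

```python
from itertools import combinations


def has_infrequent_subset(candidate, frequent_smaller):
    """Return True if any (k-1)-subset of `candidate` is not frequent.

    By the Apriori principle such a candidate cannot be frequent,
    so it can be discarded without scanning the data.
    """
    k = len(candidate)
    return any(
        frozenset(subset) not in frequent_smaller
        for subset in combinations(candidate, k - 1)
    )


# Hypothetical frequent 2-itemsets, for illustration only
frequent_pairs = {
    frozenset({"Bread", "Butter"}),
    frozenset({"Bread", "Milk"}),
}

candidate = frozenset({"Bread", "Butter", "Milk"})
print(has_infrequent_subset(candidate, frequent_pairs))  # True: {Butter, Milk} is not frequent
```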

What is the Apriori Algorithm in Data Mining, and How Does It Work?

The Apriori algorithm is widely used in data mining to identify buying patterns in retail, detect fraud in banking transactions, and recommend products or content (e.g., Netflix, Amazon).

Example: Supermarket Sales Analysis

Suppose a store has the following transactions:

| Transaction | Items Purchased |
|---|---|
| 1 | Milk, Bread, Butter |
| 2 | Bread, Eggs |
| 3 | Bread, Jam |
| 4 | Bread, Butter, Jam |
| 5 | Milk, Bread |

Steps in the Apriori Algorithm

The algorithm follows a structured process:

Step 1: Set minimum support threshold = 40%

- The threshold sets how frequently an itemset must appear to be considered relevant. With five transactions, 40% means an itemset must appear in at least 2 of them.

Step 2: Scan the Dataset

- Count occurrences of single items (1-itemsets): Bread (5/5), Butter (2/5), Milk (2/5), Jam (2/5), Eggs (1/5).

Step 3: Filter Frequent Itemsets

- Keep only itemsets that meet the minimum support.

- Frequent 1-itemsets: Bread (5/5), Butter (2/5), Milk (2/5), Jam (2/5). Eggs (1/5) is discarded.
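Steps 1 to 3 amount to one pass over the data plus a filter. The sketch below applies them to the five transactions in the table; the variable names and the use of Python sets are illustrative choices, not part of the algorithm's definition.

```python
from collections import Counter

# The five transactions from the example table
transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]

MIN_SUPPORT = 0.4  # Step 1: 40% minimum support

# Step 2: a single scan counts every individual item
item_counts = Counter(item for t in transactions for item in t)

# Step 3: keep items whose support (count / number of transactions) meets the threshold
n = len(transactions)
frequent_items = {
    item: count for item, count in item_counts.items() if count / n >= MIN_SUPPORT
}

print(sorted(frequent_items.items()))
# [('Bread', 5), ('Butter', 2), ('Jam', 2), ('Milk', 2)]
```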

Step 4: Generate Candidate Itemsets

- Combine frequent itemsets to form larger sets (e.g., pairs, triplets).

Generate 2-Itemsets

- (Bread, Butter) - 2/5 (kept).

- (Bread, Milk) - 2/5 (kept).

- (Bread, Jam) - 2/5 (kept).

- (Butter, Milk) - 1/5 (discarded).

- (Butter, Jam) - 1/5 (discarded).

- (Milk, Jam) - 0/5 (discarded).
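Candidate generation can be sketched as a join followed by a prune, then a scan to count the survivors. This is a simplified join (it unions any two frequent itemsets whose union has the right size) rather than the prefix-based join of the original Apriori formulation; it yields the same candidates but does more comparisons. The function names are hypothetical, and the starting itemsets are the frequent single items of the example table (Bread, Butter, Milk, Jam).

```python
from itertools import combinations

transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]

# Frequent 1-itemsets at 40% minimum support (at least 2 of 5 transactions)
frequent_1 = {frozenset([item]) for item in ("Bread", "Butter", "Milk", "Jam")}
MIN_COUNT = 2


def generate_candidates(frequent_prev, k):
    """Join frequent (k-1)-itemsets into k-itemsets, pruning any candidate
    that has an infrequent (k-1)-subset (the Apriori principle)."""
    candidates = set()
    for a in frequent_prev:
        for b in frequent_prev:
            union = a | b
            if len(union) == k and all(
                frozenset(s) in frequent_prev for s in combinations(union, k - 1)
            ):
                candidates.add(union)
    return candidates


def count_support(candidates, transactions):
    """Scan the data once and count the transactions containing each candidate."""
    return {c: sum(1 for t in transactions if c <= t) for c in candidates}


candidates_2 = generate_candidates(frequent_1, 2)
counts_2 = count_support(candidates_2, transactions)
for itemset, count in sorted(counts_2.items(), key=lambda kv: sorted(kv[0])):
    status = "kept" if count >= MIN_COUNT else "discarded"
    print(f"{sorted(itemset)}: {count}/{len(transactions)} ({status})")
# ['Bread', 'Butter']: 2/5 (kept)
# ['Bread', 'Jam']: 2/5 (kept)
# ['Bread', 'Milk']: 2/5 (kept)
# ['Butter', 'Jam']: 1/5 (discarded)
# ['Butter', 'Milk']: 1/5 (discarded)
# ['Jam', 'Milk']: 0/5 (discarded)
```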

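Step 5: Repeat Until No New Frequent Itemsets Are Found

Continue expanding and filtering until no more frequent patterns are detected. In this example, every candidate 3-itemset, such as (Bread, Butter, Jam), contains an infrequent pair, so all of them are pruned and the algorithm stops.

Step 6: Generate Association Rules

Extract meaningful rules from the frequent itemsets (e.g., “Bread → Butter” with 40% confidence, since butter appears in 2 of the 5 transactions that contain bread; the reverse rule “Butter → Bread” has 100% confidence).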

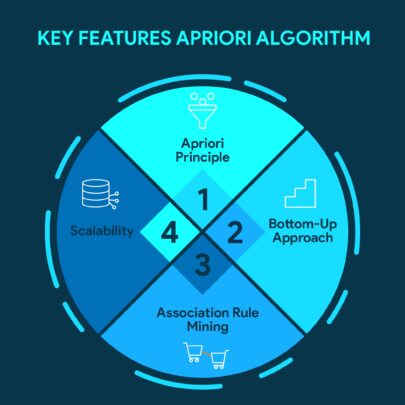
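Putting the steps together, a compact sketch of the level-wise loop plus rule extraction might look like the following. It is a minimal, unoptimized illustration on the example table, not a production implementation; `apriori` and `association_rules` are hypothetical helper names here (they are not imported from any library), and the 50% minimum confidence is an assumed value.

```python
from itertools import combinations

# Transactions from the example table (sets allow fast subset tests)
transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]


def apriori(transactions, min_support):
    """Return {frozenset(itemset): count} for every frequent itemset."""
    min_count = min_support * len(transactions)

    # Level 1: one scan to count single items
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    frequent = {s: c for s, c in counts.items() if c >= min_count}
    all_frequent = dict(frequent)

    k = 2
    while frequent:  # stop when a level produces no frequent itemsets
        prev = set(frequent)
        # Join (k-1)-itemsets into k-itemsets and prune any candidate
        # with an infrequent (k-1)-subset (the Apriori principle)
        candidates = {
            a | b
            for a in prev
            for b in prev
            if len(a | b) == k
            and all(frozenset(s) in prev for s in combinations(a | b, k - 1))
        }
        # One more scan of the data per level to count surviving candidates
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        frequent = {s: c for s, c in counts.items() if c >= min_count}
        all_frequent.update(frequent)
        k += 1
    return all_frequent


def association_rules(frequent, n_transactions, min_confidence):
    """Split each frequent itemset into X -> Y rules that meet min_confidence."""
    rules = []
    for itemset, count in frequent.items():
        for size in range(1, len(itemset)):
            for lhs in combinations(itemset, size):
                lhs = frozenset(lhs)
                rhs = itemset - lhs
                # Confidence(X -> Y) = count(X and Y) / count(X)
                confidence = count / frequent[lhs]
                if confidence >= min_confidence:
                    rules.append((lhs, rhs, count / n_transactions, confidence))
    return rules


frequent = apriori(transactions, min_support=0.4)
rules = association_rules(frequent, len(transactions), min_confidence=0.5)
for lhs, rhs, support, confidence in sorted(rules, key=lambda r: (sorted(r[0]), sorted(r[1]))):
    print(f"{sorted(lhs)} -> {sorted(rhs)}  support={support:.2f}  confidence={confidence:.2f}")
# ['Butter'] -> ['Bread']  support=0.40  confidence=1.00
# ['Jam'] -> ['Bread']  support=0.40  confidence=1.00
# ['Milk'] -> ['Bread']  support=0.40  confidence=1.00
```

With `min_confidence=0.5`, only the three rules pointing to Bread survive. Lowering the threshold to 0.4 would also return Bread → Butter, Bread → Jam, and Bread → Milk, each with confidence 2/5 ÷ 5/5 = 0.4.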

Key Features of Apriori Algorithm

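- Bottom-Up Approach: Starts with single items and expands to larger itemsets.

- Apriori Principle: Prunes any candidate that has an infrequent subset before it is counted, improving efficiency.

- Scalability: Pruning keeps the search manageable on reasonably large datasets, although performance degrades on very large or dense ones.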

- Association Rule Mining: Generates rules like, ‘If X is bought, then Y is likely bought.’

Applications of the Apriori Algorithm

- Retail & E-commerce: Market basket analysis (e.g., Amazon’s ‘Frequently Bought Together’).

- Healthcare: Identifying common symptoms or treatment patterns.

- Banking: Fraud detection by spotting unusual transaction pairs.

- Entertainment: Personalized recommendations (Netflix, Spotify).

Advantages and Disadvantages

Advantages:

- Simple and easy to implement.

- Effective for finding hidden patterns.

- Works well with categorical data.

Disadvantages:

- Requires a full scan of the dataset for each itemset size, which slows performance.

- Sensitive to support threshold settings.

- Struggles with very large or dense datasets, where the number of candidate itemsets can grow rapidly.

Use Cases of the Apriori Algorithm

Retail: Optimizing Product Placements

The algorithm helps stores identify items that are frequently bought together (like chips and soda) from purchase records. For instance, if the data indicates that 70% of customers who purchase shampoo also purchase conditioner, the store can place the two products together to increase sales. Walmart and Amazon use this approach for their “Frequently Bought Together” features.

Healthcare: Predicting Disease Correlations

Hospitals apply the algorithm to patient records to find common symptom and drug combinations. For instance, if most diabetes patients are also prescribed heart medication, doctors can watch for related heart complications earlier. This helps in early intervention and personalized treatment plans.

Finance: Detecting Fraudulent Transactions

Banks analyze transaction patterns to spot unusual activity. If, in known fraud cases, a credit card purchase at a gas station (X) is often followed within minutes by expensive online purchases (Y), the rule X → Y can be used to flag similar activity as likely fraud. PayPal uses a similar method to prevent scams.

Telecom: Analyzing Call Patterns

Mobile companies study call records to detect churn risks. If customers who call support (X) and then use roaming (Y) often cancel subscriptions, targeted retention offers can be sent. Vodafone has used this to reduce customer attrition by 15%.

Apriori Algorithm Metrics:

Three metrics drive the algorithm and the rules it produces: support, confidence, and lift. They help businesses understand which product pairs are frequently bought together (support), how strongly buying one product predicts buying another (confidence), and whether the relationship is meaningful or just coincidental (lift).

1. Support: How Frequently an itemset appears

- It measures the fraction of all transactions that contain the itemset.

- Formula:

Support (X) = (Number of Transactions containing X) / (Total transactions)

- Example: If milk appears in 40 out of 100 transactions, Support (milk) = 0.4.
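The support formula translates directly into code. The sketch below applies it to the five-transaction table from the earlier example, where milk appears in 2 of 5 transactions and so also has a support of 0.4; the helper name `support` is illustrative.

```python
# Transactions from the example table
transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]


def support(itemset, transactions):
    """Support(X): fraction of all transactions that contain every item of X."""
    itemset = set(itemset)
    containing = sum(1 for t in transactions if itemset <= t)
    return containing / len(transactions)


print(support({"Milk"}, transactions))             # 0.4  (2 of 5)
print(support({"Bread", "Butter"}, transactions))  # 0.4  (2 of 5)
print(support({"Eggs"}, transactions))             # 0.2  (1 of 5)
```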

2. Confidence: Likelihood of Y appearing when X is bought.

- Shows the probability that a transaction contains Y, given that it contains X.

- Formula:

Confidence (X → Y) = Support (X and Y together) / Support (X)

- Example: If (bread, butter) appears in 30 transactions and bread appears in 50, Confidence (bread → butter) = 30 / 50 = 0.6.
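Because both supports share the same denominator (the total number of transactions), that total cancels out and confidence depends only on the two counts. The sketch below reproduces the bread and butter example; the total of 100 transactions is an assumption added for illustration, and any other total gives the same result.

```python
def confidence(support_xy, support_x):
    """Confidence(X -> Y) = Support(X and Y together) / Support(X)."""
    return support_xy / support_x


# (bread, butter) in 30 transactions, bread in 50.
# The total of 100 is an assumed value; it cancels out of the ratio.
total = 100
print(round(confidence(30 / total, 50 / total), 2))  # 0.6
```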

3. Lift: Measures the strength of a rule

- Indicates how much more likely Y is bought with X compared to its normal purchase rate.

- Formula:

Lift (X → Y) = Confidence (X → Y) / Support (Y)

Interpretation:

Lift = 1: No relationship between X and Y.

Lift > 1: Positive correlation (likely bought together).

Lift < 1: Negative correlation (less likely to be bought together).
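Applying the lift formula to the earlier five-transaction table shows all three cases. Bread appears in every transaction, so knowing that someone bought bread says nothing about butter (lift 1.0). Butter and jam occur together more often than chance would predict (lift 1.25). Milk and jam never occur together (lift 0.0). The helper names below are illustrative.

```python
# Transactions from the example table
transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]


def support(itemset):
    """Fraction of transactions containing every item of `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def lift(x, y):
    """Lift(X -> Y) = Confidence(X -> Y) / Support(Y)."""
    conf = support(set(x) | set(y)) / support(x)
    return conf / support(y)


for x, y in [({"Bread"}, {"Butter"}), ({"Butter"}, {"Jam"}), ({"Milk"}, {"Jam"})]:
    print(sorted(x), "->", sorted(y), round(lift(x, y), 2))
# ['Bread'] -> ['Butter'] 1.0
# ['Butter'] -> ['Jam'] 1.25
# ['Milk'] -> ['Jam'] 0.0
```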

Combining Apriori with Other Techniques

To improve efficiency, the Apriori algorithm can be integrated with:

- FP-Growth Algorithm: Avoids candidate generation by compressing transactions into a tree, which makes it faster on large datasets.

- Machine Learning Models: Enhances predictive accuracy.

- Parallel Computing: Speeds up processing.

Methods to Improve the Apriori Algorithm

- Partitioning: Split data to reduce scans.

- Hash-based Techniques: Speed up candidate generation.

- Dynamic Support Thresholds: Adjust thresholds for better results.

- Sampling: Use smaller datasets for initial analysis.
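To make the hash-based idea concrete, here is a minimal sketch in the style of the DHP (Direct Hashing and Pruning) approach. While counting single items, the first pass also hashes every pair in each transaction into a small table of bucket counters. Because a bucket's count is an upper bound on the count of any pair hashed into it, a pair whose bucket falls below the threshold cannot be frequent and is never counted. The bucket count of 5 and the `crc32`-based `bucket` function are assumptions chosen for illustration.

```python
import zlib
from collections import Counter
from itertools import combinations

transactions = [
    {"Milk", "Bread", "Butter"},
    {"Bread", "Eggs"},
    {"Bread", "Jam"},
    {"Bread", "Butter", "Jam"},
    {"Milk", "Bread"},
]

MIN_COUNT = 2    # 40% of 5 transactions
NUM_BUCKETS = 5  # assumed value; real datasets would use far more buckets


def bucket(pair):
    """Map a sorted item pair to a bucket using a stable hash (crc32)."""
    return zlib.crc32("|".join(pair).encode("utf-8")) % NUM_BUCKETS


# Pass 1: count single items and hash every pair in each transaction into a bucket
item_counts = Counter()
bucket_counts = Counter()
for t in transactions:
    item_counts.update(t)
    for pair in combinations(sorted(t), 2):
        bucket_counts[bucket(pair)] += 1

frequent_items = sorted(i for i, c in item_counts.items() if c >= MIN_COUNT)

# A candidate pair needs two frequent items AND a bucket count >= MIN_COUNT
candidates = {
    pair
    for pair in combinations(frequent_items, 2)
    if bucket_counts[bucket(pair)] >= MIN_COUNT
}

# Pass 2: exact counts for the surviving candidates only
pair_counts = {p: sum(1 for t in transactions if set(p) <= t) for p in candidates}
frequent_pairs = {p for p, c in pair_counts.items() if c >= MIN_COUNT}
print(sorted(frequent_pairs))
# [('Bread', 'Butter'), ('Bread', 'Jam'), ('Bread', 'Milk')]
```

Which infrequent pairs get filtered out early depends on how they collide in the hash table, but the final frequent pairs are always the same, because the bucket test never discards a pair that could be frequent.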

Wrapping Up!

The Apriori algorithm remains a fundamental tool in data mining, helping businesses uncover valuable insights from transactional data. It identifies frequently purchased item combinations by leveraging the Apriori principle, which systematically reduces the search space by pruning itemsets that cannot be frequent. Retailers use it to discover products often bought together (like chips and soda), while the healthcare and finance sectors apply it to uncover treatment patterns or fraud signals. Despite its limitations, combining it with newer techniques can further improve its performance.

Modern alternatives and upgrades, such as FP-Growth (which uses a tree structure) and parallel computing, make frequent-itemset mining faster. By harnessing these capabilities, businesses can turn raw data into actionable strategies: optimizing promotions, predicting trends, and making sharper decisions backed by real evidence.

To learn more, visit WisdomPlexus today!

FAQ

Q: What are algorithms in data mining?

Ans: Algorithms in data mining are step-by-step methods used to find patterns, trends, or useful information from large sets of data.

Q: Is apriori supervised or unsupervised?

Ans: Apriori is an unsupervised algorithm used to find frequent itemsets and associations in data, like finding which items are often bought together in a store.

Q: Which algorithms are used in decision trees?

Ans: Decision trees use algorithms like ID3, C4.5, and CART to split data into branches based on certain conditions, helping to make decisions or predictions.
